Recent pretix.eu availability issues

Dear customers,

June has not been a good month for the availability of pretix Hosted. Our system was impacted multiple times for a variety of different reasons. First and foremost, we want to apologize deeply for letting you down. We know that you rely on us for selling and validating your tickets, which is often a mission-critical part of your business. The availability of our service is a top priority for us, and we do not take these failures lightly.

As always, we want to share the details of these incidents with you transparently and publicly to explain how they happened. We also want to inform you about the actions we are taking to prevent similar failures in the future, in the hope of regaining your trust that we take good care of your systems.

Events

Incident on 2021-06-05

On Saturday, June 5 from 11:53 to 11:58 CEST our system was almost completely unavailable. At that time, we deployed a new version of pretix that included a feature we had recently built. We usually deploy new versions of pretix several times a day, and our deployment process normally does not cause any disruption in availability.

In this particular instance, our deployment contained not only a change to the program code but also a change to the structure of our database. In these cases, we review the changes in great detail and apply them to the system individually to minimize any intermittent problems. Unfortunately, we missed the fact that one of the changes was written in a way that required the database to fully rewrite a large table. As soon as we noticed this, we aborted the change. However, the system needed a few more minutes to recover, because the table rewrite caused a large amount of network traffic when our database replicated the changes to our secondary database servers.

Incident on 2021-06-07

On Monday, June 7 from 16:43 to 17:11 CEST our system was significantly slower than usual and approximately 25 % of requests resulted in an error message or timeout.

This incident started at 16:43 with a sudden, significant increase in load on our application layer. We are still not entirely sure of the cause, but we suspect that it was connected to a large increase in demand for a specific customer's ticket shop, which caused unusually high CPU load.

Our monitoring systems are currently optimized to detect full system failures as quickly as possible. Since the system did not fail completely but only became slow and unreliable, our system administration team did not become aware of the issue until 16:55. After identifying the problem as high CPU load on the application layer, our team started moving our servers to more powerful instances with more CPU cores at 16:59. Performance improved step by step as more servers were upgraded. By 17:11, all application servers had been scaled to the highest tier available at our cloud provider. Around the same time, the error rate dropped back to 0 %.

Since the load remained at a high level, we took further action the next day and also increased the number of server instances by 20 %.

Incident on 2021-06-08

On Tuesday, June 8 from 10:35 to 10:50 CEST our system was significantly slower than usual and approximately 50 % of requests resulted in an error message or timeout.

This incident happened for a reason unrelated to the other incidents. Since we expected the load on our systems to increase during the summer season, we had started moving our system to a new data center to gain more performance and flexibility.

Our system mainly consists of three layers: a load balancer layer (the servers you interact with), an application layer (the servers our software runs on), and a database layer (the servers storing all the data). On May 20, we had moved the database layer as well as the application layer to our new data center provider. However, to reduce operational risk and to give ourselves enough time to prepare all changes carefully, we had not yet moved our load balancer layer, so our system was temporarily operating across two data centers.

On June 8, a network outage in the new data center made the connection between the two data centers unreliable. This caused performance issues and gave our load balancers incorrect information about the availability of the application layer.

There wasn't much for us to do immediately except wait for the networking issue to be resolved. However, as soon as the issue was resolved, we prioritized the necessary work to move our load balancers to the new data center as well. We finished all required preparations on the same day and started to test the new servers. The actual switchover happened on June 10 between 18:00 and 19:00 CEST without any customer impact. We still have a few components to move to the new data center, but all servers critical to answering your requests are now in the same place again.

Incident on 2021-06-13

On Sunday, June 13 from 16:15 to 16:30 CEST our system was significantly slower than usual and approximately 50 % of requests resulted in an error message or timeout.

This incident started at 16:15 due to a very high number of requests. The peak load was 10 times higher than our usual levels and 5 times higher than the peak we had seen during the earlier incident on June 7. Our monitoring system notified our team at 16:20 because test purchases were failing. At 16:22, we started scaling the servers we had added on June 8 to maximum capacity as well, which we completed at 16:27. Meanwhile, the number of requests started to decrease from 16:23 onward. Around 16:30, full system availability and regular performance were restored.

In this case, we were able to trace the increased load back to high demand for a specific customer's tickets with certainty. This allowed us to react more precisely. Since we knew that similar levels of load could recur at around the same time on the following days, we were also able to monitor the situation more closely.

On the same day, June 13, we increased the number of servers on our application layer by another 66 %. In parallel, we started developing changes to our software that improve the performance of the components that caused the most load during this incident.

Incident on 2021-06-14

On Monday, June 14 from 16:15 to 16:25 CEST our system was significantly slower than usual and approximately 50 % of requests resulted in an error message or timeout.

As described above, we expected similar load to recur, but we hoped that the significant number of added servers would be enough to handle it. We started actively monitoring the systems around the expected time, only to find that the CPUs of our new servers were not fully utilized and that users were running into issues again.

After a few minutes, we found that a configuration error caused our software to use only 12 of the 16 CPU cores provided by our newly added servers. Fortunately, the load started to decrease at 16:25, at which point the system was able to handle it again, even though we had not yet deployed our software changes.
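The post does not say which setting was wrong. As a hypothetical illustration, assuming one worker process per CPU core (a common setup for CPU-bound Python web applications), a startup check like the following sketch would flag a worker count that leaves cores idle, for example one carried over from a smaller instance type. The function names are invented for this example and are not part of pretix.

```python
import os


def available_cores() -> int:
    """Number of CPU cores this process may run on (hypothetical helper)."""
    # On Linux, sched_getaffinity reflects the process's CPU affinity mask
    # (e.g. taskset or cpusets); elsewhere fall back to os.cpu_count(),
    # which may return None.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def check_worker_count(configured_workers: int, cores: int) -> list[str]:
    """Return warnings if the configured worker count leaves cores idle.

    Assumes one single-threaded worker process per core (hypothetical policy).
    """
    warnings = []
    if configured_workers < cores:
        warnings.append(f"only {configured_workers} of {cores} cores will be used")
    return warnings


if __name__ == "__main__":
    # Scenario resembling the incident: 12 workers on a 16-core server.
    for message in check_worker_count(12, 16):
        print("WARNING:", message)
    # In a real deployment, compare against the detected core count instead.
    print("detected cores:", available_cores())
```

Running such a check at startup (or as part of provisioning) turns a silent capacity shortfall into a visible warning before the next peak arrives.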

We did not catch this earlier because we only finished adding the new servers late the previous night, and we did not want to risk running a load test on the production system during business hours. Of course, we fixed the configuration error immediately.

We wanted to be extra sure that there would be no load-related outages any time soon, even if load increased further. In the afternoon of June 14, we increased the number of servers by another 50 %. At this point, we were running with 375 % more application server capacity than just a week before. Additionally, we deployed the code changes we had started working on the previous day, and late at night we ran a couple of tests with simulated load to be confident that performance would hold for the next peaks.

As a safeguard in case things still went wrong, we also implemented an option to separate the traffic of the customer in question from all other customers. So far, we have not needed to use that option.

Infrastructure changes

We had now resolved the immediate issue by adding a lot more capacity. The system remained stable for the following days, even though high-load peaks continued to occur almost twice per day. However, it took far too many failures to get here, and even though we now have a good buffer to handle additional load, we immediately started working on changes to prevent this from happening again in the long term.

Load spikes aren't unusual for a ticketing company. While we can run our application on many servers at the same time, there are limits to that approach, since we require a shared data store that handles much of the workload, such as making sure we don't sell too many tickets. Additionally, load spikes in ticketing are often very short and steep as well as very hard to predict, which makes it difficult to always have the right number of servers, even with a setup that tries to scale automatically.

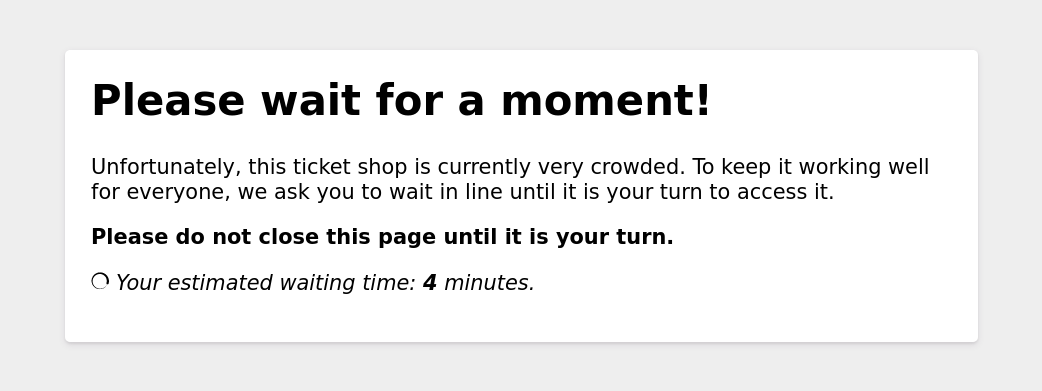

Most ticketing companies deal with load spikes by creating a "virtual waiting room" that puts incoming users in a queue and keeps them out of the system for a couple of minutes to distribute the load over a longer time frame. The big benefit of this approach is that it communicates clearly with end users and avoids the frustration of error pages in the middle of checkout.
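To make the idea concrete, here is a minimal sketch of a first-in, first-out waiting room that admits a fixed number of users per time slot ("tick") into the shop. The class and its `admit_per_tick` parameter are hypothetical and only illustrate the general technique; this is not how pretix implements its waiting room.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class WaitingRoom:
    """Hypothetical FIFO waiting room admitting at most `admit_per_tick` users per tick.

    A "tick" could be e.g. one second; choosing it is a deployment decision.
    """
    admit_per_tick: int
    queue: deque = field(default_factory=deque)
    admitted: set = field(default_factory=set)

    def arrive(self, user_id: str) -> int:
        """Enqueue a user; return their 1-based position in line, or 0 if admitted."""
        if user_id in self.admitted:
            return 0
        if user_id not in self.queue:  # reloading the page keeps your place
            self.queue.append(user_id)
        return self.queue.index(user_id) + 1

    def tick(self) -> list[str]:
        """Let the next batch of users into the shop, in arrival order."""
        batch = []
        while self.queue and len(batch) < self.admit_per_tick:
            user = self.queue.popleft()
            self.admitted.add(user)
            batch.append(user)
        return batch


if __name__ == "__main__":
    room = WaitingRoom(admit_per_tick=2)
    for user in ["a", "b", "c", "d", "e"]:
        room.arrive(user)
    print(room.tick())        # ['a', 'b']
    print(room.arrive("e"))   # 3: "c" and "d" are still ahead
```

The admission rate caps the load reaching the application layer regardless of how many users arrive at once, and the queue position gives users the clear feedback described above instead of an error page.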

We had already built the technology for such a virtual waiting room back in 2018, and we have used it a couple of times over the years. However, these were always predictable load spikes (e.g. tickets for a festival going on sale on an announced date), and setting up a virtual waiting room was a manual process. It was automated enough to be done quickly, though: in 2019, we helped a pretix Enterprise customer set up a virtual waiting room less than 60 minutes after they asked us for help in a high-load situation.

However, due to organizational issues on the side of the client causing the load, we were unable to deploy a virtual waiting room using our existing technology quickly enough to help with the specific situation at hand.

As we grow and gain more customers, unpredicted load spikes are bound to happen more often. While the best outcome is obviously to handle the load spikes by just scaling quickly enough, we need to ensure that a load spike caused by one customer does not impact the availability of the system for other customers.

On Tuesday, June 15, we started working on a significant change to our load balancer setup that allows us to automatically enable virtual waiting rooms for individual customers if load increases beyond a specific threshold. We finished this work on Saturday, June 19, and started deploying the new system in a "dry-run" mode: it did not yet show any waiting rooms, but recorded relevant data points to help us calibrate the system efficiently.
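The following sketch illustrates the general mechanism of a per-customer trigger with a dry-run mode. It counts requests per customer in a sliding time window; once a customer exceeds a threshold, their traffic would be sent to a waiting room, while in dry-run mode the decision is only logged for calibration. The real system lives in the load balancer layer; this Python version, its class name, and the `threshold`/`window` parameters are hypothetical.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class AutoWaitingRoom:
    """Hypothetical per-customer load trigger with a dry-run mode."""

    def __init__(self, threshold: int, window: float, dry_run: bool = True,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold   # max requests per window before queueing
        self.window = window         # sliding window length in seconds
        self.dry_run = dry_run
        self.clock = clock           # injectable for testing
        self.hits = defaultdict(deque)  # customer -> request timestamps
        self.would_trigger = []         # dry-run log: (time, customer, count)

    def should_queue(self, customer: str) -> bool:
        """Record one request; return True if it should go to the waiting room."""
        now = self.clock()
        hits = self.hits[customer]
        hits.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while hits and hits[0] <= now - self.window:
            hits.popleft()
        over = len(hits) > self.threshold
        if over and self.dry_run:
            # Record the data point, but keep serving the request normally.
            self.would_trigger.append((now, customer, len(hits)))
            return False
        return over
```

Because the counters are kept per customer, a spike in one shop only queues that shop's visitors, and all other customers continue to be served directly. The dry-run log shows how often, and for whom, a given threshold would have fired.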

Incident on 2021-06-21

On Monday, June 21 from 10:37 to 10:53 CEST our system was significantly slower than usual. We're not aware of a significant number of failed requests.

As part of deploying the new load balancer setup with waiting room support, we switched from haproxy to openresty for our TLS termination, since openresty gives us the flexibility required to implement such complex behavior.

It turned out that a bug somewhere in the interaction between openresty and our own logic caused openresty to use 100 % of available CPU resources after a configuration reload. Apparently, the bug was only triggered if a large amount of traffic had been handled since the previous configuration reload. We did not pin down the exact cause, but we were able to narrow it down to a specific sub-feature. We re-implemented this feature from scratch using an entirely different approach, after which we were no longer able to trigger the bug.

Incident on 2021-06-23

On Wednesday, June 23 from 15:11 to 15:19 CEST our system was fully unavailable.

This incident, again, was unrelated to load or infrastructure changes to our system but was caused by a large-scale network outage at one of our data center providers.

There's not much we can do in such a situation. Our system only runs in one data center at a time to guarantee the best possible data consistency and performance. While we do have emergency plans in case a data center fails permanently, activating them risks losing data about the most recent ticket sales and takes time in itself, so we only activate them if there is reason to believe that the outage will last longer.

Conclusion

Seven availability incidents in a month is certainly bad. Summing up the incidents, our overall availability in June drops to around 99.8 %. While this is still above our contractual SLA, it is far below our goals. As this blog post illustrates, in reality there often isn't "one problem", but a complex variety of different issues, some independent and some closely intertwined.
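For readers who want to reproduce the figure, here is one way to arrive at it, assuming that every minute inside the seven incident windows listed above counts as full downtime. This is a conservative simplification, since most incidents were partial (slower responses and a share of failed requests), and it may differ from how the figure above was calculated.

```python
# Incident windows in June 2021 (CEST), as listed in this post.
INCIDENTS = {
    "2021-06-05": ("11:53", "11:58"),
    "2021-06-07": ("16:43", "17:11"),
    "2021-06-08": ("10:35", "10:50"),
    "2021-06-13": ("16:15", "16:30"),
    "2021-06-14": ("16:15", "16:25"),
    "2021-06-21": ("10:37", "10:53"),
    "2021-06-23": ("15:11", "15:19"),
}


def minutes(hhmm: str) -> int:
    """Convert 'HH:MM' to minutes since midnight."""
    hours, mins = hhmm.split(":")
    return int(hours) * 60 + int(mins)


def june_availability() -> float:
    """Availability in percent, counting every incident minute as full downtime."""
    downtime = sum(minutes(end) - minutes(start) for start, end in INCIDENTS.values())
    total = 30 * 24 * 60  # June has 30 days = 43,200 minutes
    return 100 * (1 - downtime / total)


if __name__ == "__main__":
    print(f"{june_availability():.2f} %")  # 97 minutes of downtime -> 99.78 %
```

The windows add up to 97 minutes out of 43,200, which rounds to the 99.8 % mentioned above.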

As you can see above, we don't stop at resolving an incident and bringing the system back up. Instead, we immediately start making changes to our infrastructure and processes to prevent similar problems. Yes, these changes can sometimes cause new problems of their own, even with careful testing, as the incident on June 21 shows. However, we now run with much larger server capacity as well as significantly improved infrastructure that will automatically protect us from many possible peak-load scenarios in the future.

Of course, there are still improvements left to make. In the coming weeks, we'll work on our tooling to allow us to scale our systems up and down even more quickly. We're also planning a significant change to our monitoring system and alerting processes to alert our team more quickly in case of a partial failure, where the system still works but returns errors for a significant percentage of incoming requests.
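A check that only detects full outages would have missed states like the one on June 7, when roughly 25 % of requests failed. As a minimal sketch of the partial-failure alerting described above, the following tracks the share of failed requests over a sliding window of recent responses. Treating HTTP status codes of 500 and above and timeouts as failures, as well as the window size, minimum sample count, and threshold, are illustrative assumptions rather than our actual alerting configuration.

```python
from collections import deque
from typing import Optional


class ErrorRateAlert:
    """Hypothetical alert on the share of failed requests in a sliding window."""

    def __init__(self, window_size: int = 1000, threshold: float = 0.05,
                 min_samples: int = 100):
        self.results = deque(maxlen=window_size)  # True = request failed
        self.threshold = threshold                # e.g. 0.05 = alert at 5 % errors
        self.min_samples = min_samples            # avoid alerting on tiny samples

    def record(self, status_code: Optional[int]) -> bool:
        """Record one response (None = timeout); return True if we should alert."""
        failed = status_code is None or status_code >= 500
        self.results.append(failed)
        if len(self.results) < self.min_samples:
            return False
        error_rate = sum(self.results) / len(self.results)
        return error_rate >= self.threshold
```

Unlike a single up/down probe, this fires as soon as a meaningful fraction of traffic fails, even while most requests still succeed.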

We've also noticed that our communication throughout the incidents wasn't perfect either. While we quickly responded to all related phone calls and emails, keeping our public status page up to date, for example, often took too long. We know how frustrating it is not to know what's going on, and we're restructuring our internal processes to improve on this as well.

To close, we once again want to apologize deeply for these incidents and their impact on your business. If you have any questions, feel free to reach out to us directly.